E-readers and tablets are becoming more popular as such technologies improve, but research suggests that reading on paper still boasts unique advantages. From Scientific American magazine (reproduced with permission).

15 August 2013 – In a viral YouTube video from October 2011 a one-year-old girl sweeps her fingers across an iPad’s touchscreen, shuffling groups of icons. In the following scenes she appears to pinch, swipe and prod the pages of paper magazines as though they too were screens. When nothing happens, she pushes against her leg, confirming that her finger works just fine—or so a title card would have us believe.

The girl’s father, Jean-Louis Constanza, presents “A Magazine Is an iPad That Does Not Work” as naturalistic observation—a Jane Goodall among the chimps moment—that reveals a generational transition. “Technology codes our minds,” he writes in the video’s description. “Magazines are now useless and impossible to understand, for digital natives”—that is, for people who have been interacting with digital technologies from a very early age.

Perhaps his daughter really did expect the paper magazines to respond the same way an iPad would. Or maybe she had no expectations at all—maybe she just wanted to touch the magazines. Babies touch everything. Young children who have never seen a tablet like the iPad or an e-reader like the Kindle will still reach out and run their fingers across the pages of a paper book; they will jab at an illustration they like; heck, they will even taste the corner of a book. Today’s so-called digital natives still interact with a mix of paper magazines and books, as well as tablets, smartphones and e-readers; using one kind of technology does not preclude them from understanding another.

Nevertheless, the video brings into focus an important question: How exactly does the technology we use to read change the way we read? How reading on screens differs from reading on paper is relevant not just to the youngest among us, but to just about everyone who reads—to anyone who routinely switches between working long hours in front of a computer at the office and leisurely reading paper magazines and books at home; to people who have embraced e-readers for their convenience and portability, but admit that for some reason they still prefer reading on paper; and to those who have already vowed to forgo tree pulp entirely. As digital texts and technologies become more prevalent, we gain new and more mobile ways of reading—but are we still reading as attentively and thoroughly? How do our brains respond differently to onscreen text than to words on paper? Should we be worried about dividing our attention between pixels and ink or is the validity of such concerns paper-thin?

Since at least the 1980s researchers in many different fields—including psychology, computer engineering, and library and information science—have investigated such questions in more than one hundred published studies. The matter is by no means settled. Before 1992 most studies concluded that people read slower, less accurately and less comprehensively on screens than on paper. Studies published since the early 1990s, however, have produced more inconsistent results: a slight majority has confirmed earlier conclusions, but almost as many have found few significant differences in reading speed or comprehension between paper and screens. And recent surveys suggest that although most people still prefer paper—especially when reading intensively—attitudes are changing as tablets and e-reading technology improve and reading digital books for facts and fun becomes more common. In the U.S., e-books currently make up between 15 and 20 percent of all trade book sales.

Even so, evidence from laboratory experiments, polls and consumer reports indicates that modern screens and e-readers fail to adequately recreate certain tactile experiences of reading on paper that many people miss and, more importantly, prevent people from navigating long texts in an intuitive and satisfying way. In turn, such navigational difficulties may subtly inhibit reading comprehension. Compared with paper, screens may also drain more of our mental resources while we are reading and make it a little harder to remember what we read when we are done. A parallel line of research focuses on people’s attitudes toward different kinds of media. Whether they realize it or not, many people approach computers and tablets with a state of mind less conducive to learning than the one they bring to paper.

“There is physicality in reading,” says developmental psychologist and cognitive scientist Maryanne Wolf of Tufts University, “maybe even more than we want to think about as we lurch into digital reading—as we move forward perhaps with too little reflection. I would like to preserve the absolute best of older forms, but know when to use the new.”

Navigating textual landscapes

Understanding how reading on paper is different from reading on screens requires some explanation of how the brain interprets written language. We often think of reading as a cerebral activity concerned with the abstract—with thoughts and ideas, tone and themes, metaphors and motifs. As far as our brains are concerned, however, text is a tangible part of the physical world we inhabit. In fact, the brain essentially regards letters as physical objects because it does not really have another way of understanding them. As Wolf explains in her book Proust and the Squid, we are not born with brain circuits dedicated to reading. After all, we did not invent writing until relatively recently in our evolutionary history, around the fourth millennium B.C. So the human brain improvises a brand-new circuit for reading by weaving together various regions of neural tissue devoted to other abilities, such as spoken language, motor coordination and vision.

Some of these repurposed brain regions are specialized for object recognition—they are networks of neurons that help us instantly distinguish an apple from an orange, for example, yet classify both as fruit. Just as we learn that certain features—roundness, a twiggy stem, smooth skin—characterize an apple, we learn to recognize each letter by its particular arrangement of lines, curves and hollow spaces. Some of the earliest forms of writing, such as Sumerian cuneiform, began as characters shaped like the objects they represented—a person’s head, an ear of barley, a fish. Some researchers see traces of these origins in modern alphabets: C as crescent moon, S as snake. Especially intricate characters—such as Chinese hanzi and Japanese kanji—activate motor regions in the brain involved in forming those characters on paper: The brain literally goes through the motions of writing when reading, even if the hands are empty. Researchers recently discovered that the same thing happens in a milder way when some people read cursive.

Beyond treating individual letters as physical objects, the human brain may also perceive a text in its entirety as a kind of physical landscape. When we read, we construct a mental representation of the text in which meaning is anchored to structure. The exact nature of such representations remains unclear, but they are likely similar to the mental maps we create of terrain, such as mountains and trails, and of man-made physical spaces, such as apartments and offices. Both anecdotally and in published studies, people report that when trying to locate a particular piece of written information they often remember where in the text it appeared. We might recall that we passed the red farmhouse near the start of the trail before we started climbing uphill through the forest; in a similar way, we remember that we read about Mr. Darcy rebuffing Elizabeth Bennet on the bottom of the left-hand page in one of the earlier chapters.

In most cases, paper books have more obvious topography than onscreen text. An open paperback presents a reader with two clearly defined domains—the left and right pages—and a total of eight corners with which to orient oneself. A reader can focus on a single page of a paper book without losing sight of the whole text: one can see where the book begins and ends and where one page is in relation to those borders. One can even feel the thickness of the pages read in one hand and pages to be read in the other. Turning the pages of a paper book is like leaving one footprint after another on the trail—there’s a rhythm to it and a visible record of how far one has traveled. All these features not only make text in a paper book easily navigable, they also make it easier to form a coherent mental map of the text.

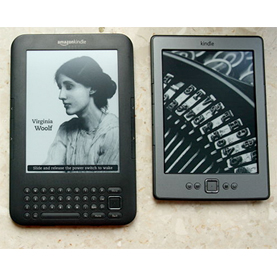

In contrast, most screens, e-readers, smartphones and tablets interfere with intuitive navigation of a text and inhibit people from mapping the journey in their minds. A reader of digital text might scroll through a seamless stream of words, tap forward one page at a time or use the search function to immediately locate a particular phrase, but it is difficult to see any one passage in the context of the entire text. As an analogy, imagine if Google Maps allowed people to navigate street by individual street, as well as to teleport to any specific address, but prevented them from zooming out to see a neighborhood, state or country. Although e-readers like the Kindle and tablets like the iPad re-create pagination (sometimes complete with page numbers, headers and illustrations), the screen displays only a single virtual page: it is there and then it is gone. Instead of hiking the trail yourself, you watch the trees, rocks and moss move past you in flashes, with no trace of what came before and no way to see what lies ahead.

“The implicit feel of where you are in a physical book turns out to be more important than we realized,” says Abigail Sellen of Microsoft Research Cambridge in England and co-author of The Myth of the Paperless Office. “Only when you get an e-book do you start to miss it. I don’t think e-book manufacturers have thought enough about how you might visualize where you are in a book.”

At least a few studies suggest that by limiting the way people navigate texts, screens impair comprehension. In a study published in January 2013 Anne Mangen of the University of Stavanger in Norway and her colleagues asked 72 tenth-grade students of similar reading ability to study one narrative and one expository text, each about 1,500 words in length. Half the students read the texts on paper and half read them in PDF files on computers with 15-inch liquid-crystal display (LCD) monitors. Afterward, students completed reading-comprehension tests consisting of multiple-choice and short-answer questions, during which they had access to the texts. Students who read the texts on computers performed a little worse than students who read on paper.

Based on observations during the study, Mangen thinks that students reading PDF files had a more difficult time finding particular information when referencing the texts. Volunteers on computers could only scroll or click through the PDFs one section at a time, whereas students reading on paper could hold the text in its entirety in their hands and quickly switch between different pages. Because of their easy navigability, paper books and documents may be better suited to absorption in a text. “The ease with which you can find out the beginning, end and everything in between and the constant connection to your path, your progress in the text, might be some way of making it less taxing cognitively, so you have more free capacity for comprehension,” Mangen says.

Supporting this research, surveys indicate that screens and e-readers interfere with two other important aspects of navigating texts: serendipity and a sense of control. People report that they enjoy flipping to a previous section of a paper book when a sentence surfaces a memory of something they read earlier, for example, or quickly scanning ahead on a whim. People also like to have as much control over a text as possible: to highlight with chemical ink, write notes to themselves in the margins and deform the paper however they choose.

Because of these preferences—and because getting away from multipurpose screens improves concentration—people consistently say that when they really want to dive into a text, they read it on paper. In a 2011 survey of graduate students at National Taiwan University, the majority reported browsing a few paragraphs online before printing out the whole text for more in-depth reading. A 2008 survey of millennials (people born between 1980 and the early 2000s) at Salve Regina University in Rhode Island concluded that, “when it comes to reading a book, even they prefer good, old-fashioned print”. And in a 2003 study conducted at the National Autonomous University of Mexico, nearly 80 percent of 687 surveyed students preferred to read text on paper as opposed to on a screen in order to “understand it with clarity”.

Surveys and consumer reports also suggest that the sensory experiences typically associated with reading—especially tactile experiences—matter to people more than one might assume. Text on a computer, an e-reader and—somewhat ironically—on any touch-screen device is far more intangible than text on paper. Whereas a paper book is made from pages of printed letters fixed in a particular arrangement, the text that appears on a screen is not part of the device’s hardware—it is an ephemeral image. When reading a paper book, one can feel the paper and ink and smooth or fold a page with one’s fingers; the pages make a distinctive sound when turned; and underlining or highlighting a sentence with ink permanently alters the paper’s chemistry. So far, digital texts have not satisfyingly replicated this kind of tactility (although some companies are innovating, at least with keyboards).

Paper books also have an immediately discernible size, shape and weight. We might refer to a hardcover edition of War and Peace as a hefty tome or a paperback Heart of Darkness as a slim volume. In contrast, although a digital text has a length—which is sometimes represented with a scroll or progress bar—it has no obvious shape or thickness. An e-reader always weighs the same, regardless of whether you are reading Proust’s magnum opus or one of Hemingway’s short stories. Some researchers have found that these discrepancies create enough “haptic dissonance” to dissuade some people from using e-readers. People expect books to look, feel and even smell a certain way; when they do not, reading sometimes becomes less enjoyable or even unpleasant. For others, the convenience of a slim portable e-reader outweighs any attachment they might have to the feel of paper books.

Exhaustive reading

Although many old and recent studies conclude that people understand what they read on paper more thoroughly than what they read on screens, the differences are often small. Some experiments, however, suggest that researchers should look not just at immediate reading comprehension, but also at long-term memory. In a 2003 study Kate Garland of the University of Leicester and her colleagues asked 50 British college students to read study material from an introductory economics course either on a computer monitor or in a spiral-bound booklet. After 20 minutes of reading Garland and her colleagues quizzed the students with multiple-choice questions. Students scored equally well regardless of the medium, but differed in how they remembered the information.

Psychologists distinguish between remembering something (which is to recall a piece of information along with contextual details, such as where, when and how one learned it) and knowing something, which is feeling that something is true without remembering how one learned the information. Generally, remembering is a weaker form of memory that is likely to fade unless it is converted into more stable, long-term memory that is “known” from then on. When taking the quiz, volunteers who had read study material on a monitor relied much more on remembering than on knowing, whereas students who read on paper depended equally on remembering and knowing. Garland and her colleagues think that students who read on paper learned the study material more thoroughly and more quickly; they did not have to spend a lot of time searching their minds for information from the text, trying to trigger the right memory. They often just knew the answers.

Other researchers have suggested that people comprehend less when they read on a screen because screen-based reading is more physically and mentally taxing than reading on paper. E-ink is easy on the eyes because it reflects ambient light just like a paper book, but computer screens, smartphones and tablets like the iPad shine light directly into people’s faces. Depending on the model of the device, glare, pixelation and flicker can also tire the eyes. LCDs are certainly gentler on the eyes than their predecessors, cathode-ray tube (CRT) monitors, but prolonged reading on glossy self-illuminated screens can cause eyestrain, headaches and blurred vision. Such symptoms are so common among people who read on screens (affecting around 70 percent of people who work long hours in front of computers) that the American Optometric Association officially recognizes computer vision syndrome.

Erik Wästlund of Karlstad University in Sweden has conducted some particularly rigorous research on whether paper or screens demand more physical and cognitive resources. In one of his experiments 72 volunteers completed the Higher Education Entrance Examination READ test—a 30-minute, Swedish-language reading-comprehension exam consisting of multiple-choice questions about five texts averaging 1,000 words each. People who took the test on a computer scored lower and reported higher levels of stress and tiredness than people who completed it on paper.

In another set of experiments 82 volunteers completed the READ test on computers, either as a paginated document or as a continuous piece of text. Afterward researchers assessed the volunteers’ attention and working memory, which is a collection of mental talents that allow people to temporarily store and manipulate information in their minds. Volunteers had to quickly close a series of pop-up windows, for example, sort virtual cards or remember digits that flashed on a screen. Like many cognitive abilities, working memory is a finite resource that diminishes with exertion.

Although people in both groups performed equally well on the READ test, those who had to scroll through the continuous text did not do as well on the attention and working-memory tests. Wästlund thinks that scrolling—which requires a reader to consciously focus on both the text and how they are moving it—drains more mental resources than turning or clicking a page, which are simpler and more automatic gestures. A 2004 study conducted at the University of Central Florida reached similar conclusions.

Attitude adjustments

An emerging collection of studies emphasizes that in addition to screens possibly taxing people’s attention more than paper, people do not always bring as much mental effort to screens in the first place. Subconsciously, many people may think of reading on a computer or tablet as a less serious affair than reading on paper. Based on a detailed 2005 survey of 113 people in northern California, Ziming Liu of San Jose State University concluded that people reading on screens take a lot of shortcuts—they spend more time browsing, scanning and hunting for keywords compared with people reading on paper, and are more likely to read a document once, and only once.

When reading on screens, people seem less inclined to engage in what psychologists call metacognitive learning regulation—strategies such as setting specific goals, rereading difficult sections and checking how much one has understood along the way. In a 2011 experiment at the Technion–Israel Institute of Technology, college students took multiple-choice exams about expository texts either on computers or on paper. Researchers limited half the volunteers to a meager seven minutes of study time; the other half could review the text for as long as they liked. When under pressure to read quickly, students using computers and paper performed equally well. When managing their own study time, however, volunteers using paper scored about 10 percentage points higher. Presumably, students using paper approached the exam with a more studious frame of mind than their screen-reading peers, and more effectively directed their attention and working memory.

Perhaps, then, any discrepancies in reading comprehension between paper and screens will shrink as people’s attitudes continue to change. The star of “A Magazine Is an iPad That Does Not Work” is three-and-a-half years old today and no longer interacts with paper magazines as though they were touchscreens, her father says. Perhaps she and her peers will grow up without the subtle bias against screens that seems to lurk in the minds of older generations. In current research for Microsoft, Sellen has learned that many people do not feel much ownership of e-books because of their impermanence and intangibility: “They think of using an e-book, not owning an e-book,” she says. Participants in her studies say that when they really like an electronic book, they go out and get the paper version. This reminds Sellen of people’s early opinions of digital music, which she has also studied. Despite initial resistance, people love curating, organizing and sharing digital music today. Attitudes toward e-books may transition in a similar way, especially if e-readers and tablets allow more sharing and social interaction than they currently do. Books on the Kindle can only be loaned once, for example.

To date, many engineers, designers and user-interface experts have worked hard to make reading on an e-reader or tablet as close to reading on paper as possible. E-ink resembles chemical ink and the simple layout of the Kindle’s screen looks like a page in a paperback. Likewise, Apple’s iBooks attempts to simulate the overall aesthetic of paper books, including somewhat realistic page-turning. Jaejeung Kim of KAIST Institute of Information Technology Convergence in South Korea and his colleagues have designed an innovative and unreleased interface that makes iBooks seem primitive. When using their interface, one can see the many individual pages one has read on the left side of the tablet and all the unread pages on the right side, as if holding a paperback in one’s hands. A reader can also flip bundles of pages at a time with a flick of a finger.

But why, one could ask, are we working so hard to make reading with new technologies like tablets and e-readers so similar to the experience of reading on the very ancient technology that is paper? Why not keep paper and evolve screen-based reading into something else entirely? Screens obviously offer readers experiences that paper cannot. Scrolling may not be the ideal way to navigate a text as long and dense as Moby Dick, but the New York Times, Washington Post, ESPN and other media outlets have created beautiful, highly visual articles that depend entirely on scrolling and could not appear in print in the same way. Some Web comics and infographics turn scrolling into a strength rather than a weakness. Similarly, Robin Sloan has pioneered the tap essay for mobile devices. The immensely popular interactive Scale of the Universe tool could not have been made on paper in any practical way. New e-publishing companies like Atavist offer tablet readers long-form journalism with embedded interactive graphics, maps, timelines, animations and sound tracks. And some writers are pairing up with computer programmers to produce ever more sophisticated interactive fiction and nonfiction in which one’s choices determine what one reads, hears and sees next.

When it comes to intensively reading long pieces of plain text, paper and ink may still have the advantage. But text is not the only way to read.